Find out how the Azure Hardware Systems and Interconnect team uses Azure NetApp Files to develop chips.

High-performance computing (HPC) workloads place significant demands on cloud infrastructure, requiring robust and scalable resources to handle complex, computationally intensive tasks. These workloads often need a high degree of parallel processing power, typically provided by clusters of central processing unit (CPU) or graphics processing unit (GPU) based virtual machines. In addition, HPC applications require substantial data storage and fast access speeds, which exceed the capabilities of traditional cloud file systems. Specialized storage solutions are required to meet their high-throughput input/output (I/O) needs.

Microsoft Azure NetApp Files is designed to deliver low latency, high performance, and enterprise-grade data management. Its unique capabilities make Azure NetApp Files well suited to several high-performance computing workloads, such as electronic design automation (EDA), seismic processing, reservoir simulation, and risk simulation. This blog highlights the differentiated capabilities of Azure NetApp Files for EDA workloads and Microsoft's silicon design journey.

Requirements for EDA workload infrastructure

EDA workloads have intensive compute and data processing requirements for solving complex tasks in simulation, physical design, and verification. Each design involves multiple simulations to increase accuracy, improve reliability, and detect design defects early, thereby reducing debugging and rework costs. By running additional simulations, silicon development engineers can test different design scenarios and optimize the chip's power, performance, and area (PPA).
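Optimizing PPA across many simulated scenarios is a multi-objective problem: one design can be faster but draw more power, another smaller but slower. A common way to narrow the candidates is to keep only the Pareto-optimal scenarios. The sketch below assumes each simulation reports a single power, frequency, and area figure; the `DesignPoint` type and all values are hypothetical and not taken from any EDA tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DesignPoint:
    """One simulated design scenario (hypothetical fields and values)."""
    name: str
    power_mw: float   # lower is better
    perf_ghz: float   # higher is better
    area_mm2: float   # lower is better


def dominates(a: DesignPoint, b: DesignPoint) -> bool:
    """True if a is no worse than b on every PPA axis and strictly better on one."""
    no_worse = (a.power_mw <= b.power_mw and a.perf_ghz >= b.perf_ghz
                and a.area_mm2 <= b.area_mm2)
    strictly_better = (a.power_mw < b.power_mw or a.perf_ghz > b.perf_ghz
                       or a.area_mm2 < b.area_mm2)
    return no_worse and strictly_better


def pareto_front(points):
    """Keep only the scenarios that no other scenario dominates."""
    return [p for p in points
            if not any(dominates(q, p) for q in points if q is not p)]


if __name__ == "__main__":
    scenarios = [
        DesignPoint("A", power_mw=120, perf_ghz=2.8, area_mm2=4.1),
        DesignPoint("B", power_mw=150, perf_ghz=3.2, area_mm2=4.3),
        DesignPoint("C", power_mw=130, perf_ghz=2.7, area_mm2=4.4),  # dominated by A
        DesignPoint("D", power_mw=110, perf_ghz=2.5, area_mm2=3.9),
    ]
    print([p.name for p in pareto_front(scenarios)])  # ['A', 'B', 'D']
```

Each extra simulation adds a point to compare, which is why running more scenarios, and therefore reading and writing more result files, tends to improve the final PPA trade-off.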

EDA workloads fall into two primary types, frontend and backend, each with different requirements for the underlying storage and compute infrastructure. Frontend workloads focus on the logic design and functional aspects of chip design and consist of thousands of short-lived parallel jobs whose I/O pattern is characterized by frequent random reads and writes across millions of small files. Backend workloads focus on translating the logic design into a physical design for manufacturing and consist of hundreds of jobs that perform sequential reads and writes on fewer, larger files.
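To make the contrast between the two patterns concrete, here is a minimal sketch that generates both against a directory. It is not an EDA tool or a benchmark: file names, file sizes, I/O sizes, and operation counts are hypothetical, and pointing `root` at an NFS mount of an Azure NetApp Files volume instead of a temporary directory is an assumption about how you might use it.

```python
import os
import random
import tempfile


def frontend_like(root, n_files=200, file_size=4096, n_ops=1000, seed=0):
    """Many small files: metadata lookups plus small random reads and writes."""
    rng = random.Random(seed)
    paths = []
    for i in range(n_files):
        path = os.path.join(root, f"block_{i:05d}.v")  # hypothetical file names
        with open(path, "wb") as f:
            f.write(os.urandom(file_size))
        paths.append(path)
    io_size = 512
    for _ in range(n_ops):
        path = rng.choice(paths)
        os.stat(path)  # metadata operation, frequent in frontend jobs
        offset = rng.randrange(0, file_size - io_size)
        if rng.random() < 0.5:
            with open(path, "rb") as f:  # small random read
                f.seek(offset)
                f.read(io_size)
        else:
            with open(path, "r+b") as f:  # small random write in place
                f.seek(offset)
                f.write(os.urandom(io_size))
    return len(paths)


def backend_like(root, total_bytes=64 * 2**20, chunk=2**20):
    """One large file: sequential write, then sequential read in large chunks."""
    path = os.path.join(root, "layout.bin")  # hypothetical file name
    with open(path, "wb") as f:
        for _ in range(total_bytes // chunk):
            f.write(os.urandom(chunk))
    bytes_read = 0
    with open(path, "rb") as f:
        while block := f.read(chunk):
            bytes_read += len(block)
    return bytes_read


if __name__ == "__main__":
    # A temporary directory keeps the sketch self-contained.
    with tempfile.TemporaryDirectory() as root:
        print(frontend_like(root), backend_like(root))
```

The frontend function is dominated by per-file opens and metadata calls, so latency per operation matters most; the backend function moves the same kind of data in megabyte-sized chunks, so sustained throughput matters most.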

Choosing a storage solution that can handle this unique mix of frontend and backend workloads is non-trivial. The SPEC consortium created the SPEC SFS benchmark to help compare storage solutions across the industry. For EDA workloads, the EDA_BLENDED benchmark reproduces the characteristic patterns of frontend and backend workloads. Its I/O operation composition is described in the following table.

| EDA workload stage | I/O operation mix |
|---|---|
| Frontend | Stat (39%), Access (15%), Read File (7%), Random Read (8%), Write File (10%), Random Write (15%), Other Ops (6%) |
| Backend | Read (50%), Write (50%) |
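The percentages in the table describe a weighted mix of operations. The sketch below draws operations in those proportions and checks the resulting shares; it only illustrates what the mix means and is not the SPEC SFS harness. The operation names are shorthand chosen for this example.

```python
import random
from collections import Counter

# EDA_BLENDED operation mix in percent, as listed in the table.
FRONTEND_MIX = {
    "stat": 39, "access": 15, "read_file": 7, "random_read": 8,
    "write_file": 10, "random_write": 15, "other": 6,
}
BACKEND_MIX = {"read": 50, "write": 50}


def sample_ops(mix, n, seed=0):
    """Draw n operation names with probability proportional to the mix."""
    rng = random.Random(seed)
    ops = list(mix)
    weights = [mix[op] for op in ops]
    return rng.choices(ops, weights=weights, k=n)


def observed_share(ops):
    """Fraction of each operation type in a sampled sequence."""
    counts = Counter(ops)
    total = len(ops)
    return {op: count / total for op, count in counts.items()}


if __name__ == "__main__":
    ops = sample_ops(FRONTEND_MIX, 100_000)
    for op, share in sorted(observed_share(ops).items()):
        print(f"{op:>12}: {share:.3f}")
```

Note that more than half of the frontend mix (stat plus access, 54%) is metadata rather than data transfer, which is why small-file metadata performance weighs so heavily for frontend jobs.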

Azure NetApp Files regular volumes are ideal for workloads such as databases and general-purpose file systems. EDA workloads, however, operate on large amounts of data and require very high throughput, which would otherwise call for multiple regular volumes. The introduction of large volumes, which support greater amounts of data, benefits EDA workloads because it simplifies data management and delivers superior performance compared to multiple regular volumes.
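The reason EDA teams end up with multiple regular volumes is simple arithmetic: the volume count must satisfy whichever limit, capacity or throughput, is hit first. The sketch below shows that calculation. The per-volume limits are inputs, not Azure NetApp Files constants, and the numbers in the usage example are illustrative only; check the current Azure NetApp Files resource limits for real values.

```python
import math


def regular_volumes_needed(capacity_tib, throughput_gibps,
                           cap_per_volume_tib, throughput_per_volume_gibps):
    """Smallest number of volumes that satisfies both capacity and throughput.

    The per-volume limits are caller-supplied assumptions, not service constants.
    """
    if min(cap_per_volume_tib, throughput_per_volume_gibps) <= 0:
        raise ValueError("per-volume limits must be positive")
    by_capacity = math.ceil(capacity_tib / cap_per_volume_tib)
    by_throughput = math.ceil(throughput_gibps / throughput_per_volume_gibps)
    return max(1, by_capacity, by_throughput)


if __name__ == "__main__":
    # Illustrative numbers only, not published service limits.
    n = regular_volumes_needed(capacity_tib=300, throughput_gibps=10,
                               cap_per_volume_tib=100,
                               throughput_per_volume_gibps=4)
    print(n)  # 3
```

Every extra volume is another namespace to mount, balance, and track across thousands of jobs; if a single large volume's limits cover the same requirement, the count drops to one.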

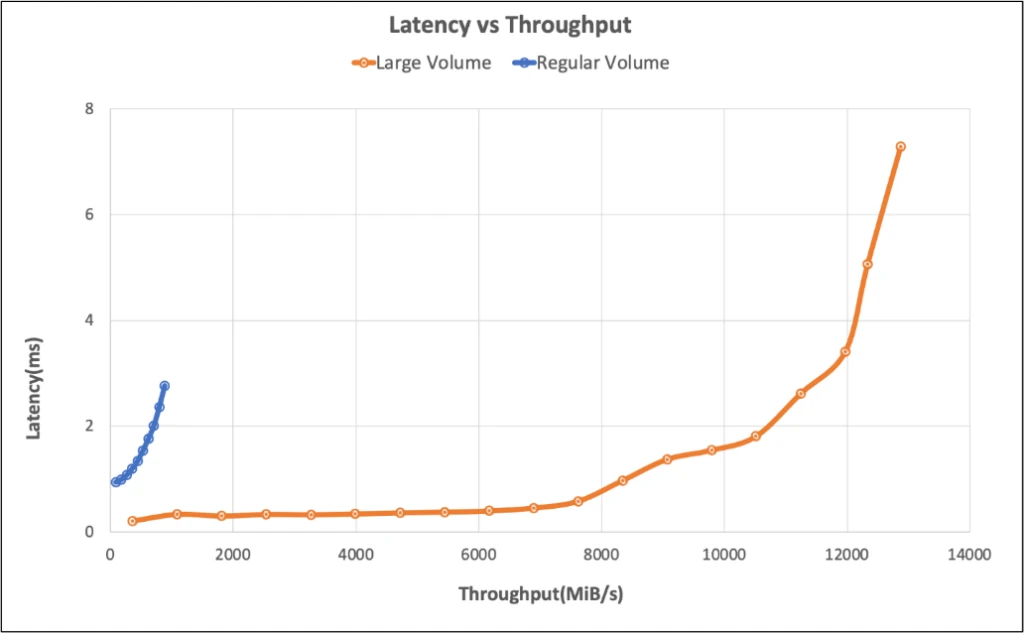

Performance testing with the SPEC SFS EDA_BLENDED benchmark shows that Azure NetApp Files can deliver ~10 GiB/s of throughput with less than 2 ms of latency using a large volume.
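One way to read a throughput and latency pair together is Little's law: the amount of data in flight equals throughput multiplied by latency. The sketch applies it to the ~10 GiB/s and 2 ms figures (2 ms is the stated upper bound, so the result is an upper estimate) and also computes how long a sustained 10 GiB/s takes to move 1 TiB. It assumes steady-state throughput and ignores protocol overhead.

```python
GIB = 2**30
MIB = 2**20


def bytes_in_flight(throughput_bytes_per_s, latency_s):
    """Little's law: outstanding data = throughput x time each request spends in the system."""
    return throughput_bytes_per_s * latency_s


def seconds_to_transfer(dataset_bytes, throughput_bytes_per_s):
    """Time to move a dataset at a sustained throughput."""
    return dataset_bytes / throughput_bytes_per_s


if __name__ == "__main__":
    tput = 10 * GIB   # ~10 GiB/s from the benchmark result
    lat = 0.002       # 2 ms upper bound on latency
    print(f"in flight: {bytes_in_flight(tput, lat) / MIB:.2f} MiB")        # 20.48 MiB
    print(f"1 TiB transfer: {seconds_to_transfer(1024 * GIB, tput):.1f} s")  # 102.4 s
```

Roughly 20 MiB must be outstanding across all clients to sustain that rate, which is only achievable when many compute nodes issue I/O in parallel, as EDA clusters do.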

Electronic design automation at Microsoft

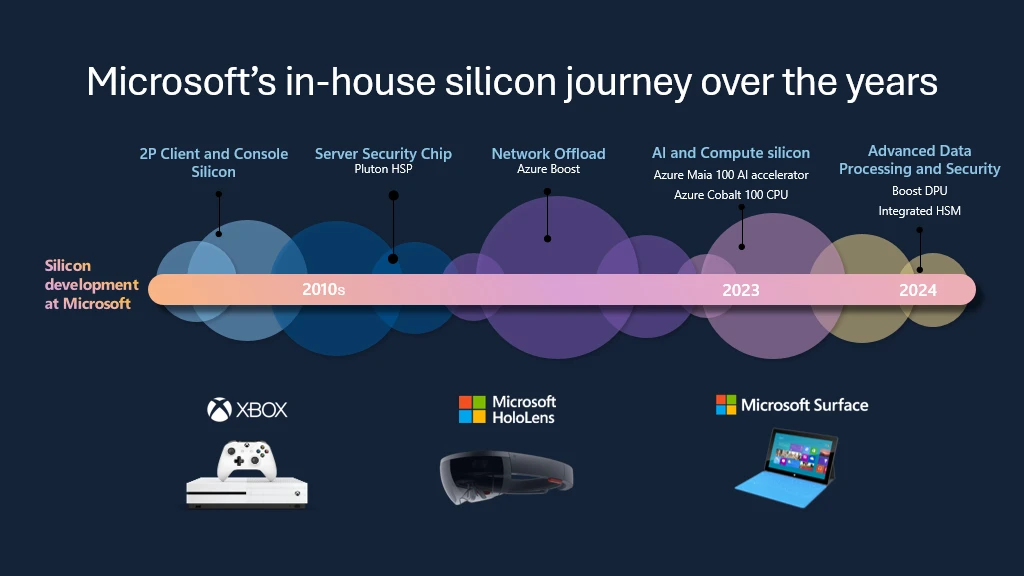

Microsoft is committed to enabling AI across every workload and experience for the devices of today and tomorrow. That starts with the design and manufacturing of silicon. Microsoft is surpassing scientific boundaries at an unprecedented pace to run EDA workflows, pushing the limits of Moore's law by adopting Azure for our own chip design needs.

Using a best-practices model to optimize Azure for chip design across customers, partners, and suppliers was essential to developing some of Microsoft's first cloud silicon chips:

- Azure Maia 100 AI Accelerator, optimized for AI tasks and generative AI.

- Azure Cobalt 100 CPU, an Arm-based processor tailored to run general-purpose compute workloads on Microsoft Azure.

- Azure Integrated Hardware Security Module, Microsoft's newest in-house security chip, designed to harden key management.

- Azure Boost DPU, the company's first in-house data processing unit, designed for data-centric workloads with high efficiency and low power.

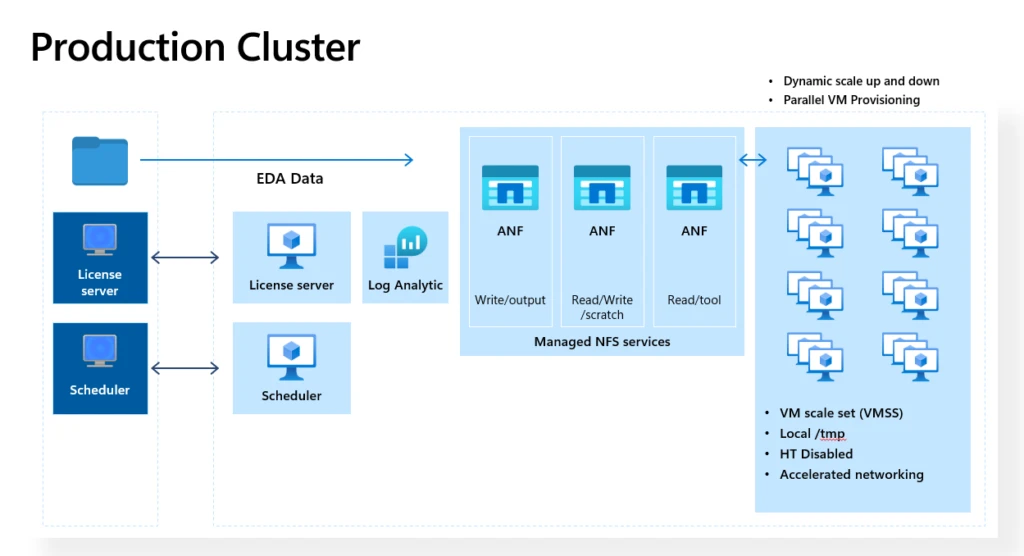

The chips developed by the Azure Cloud Hardware team are deployed in Azure servers that deliver best-in-class compute capabilities for HPC workloads and accelerate the pace of innovation, reliability, and operational efficiency in developing Azure. By adopting Azure for EDA, the Azure Cloud Hardware team enjoys the following benefits:

- Rapid, on-demand access to scalable, cutting-edge processors.

- Dynamic matching of each EDA tool to a specific processor architecture.

- Use of Microsoft innovations in AI-driven technologies for semiconductor workflows.

How Azure NetApp Files accelerates semiconductor development innovation

- Excellent performance: Azure NetApp Files can deliver up to 652,260 IOPS with less than 2 milliseconds of latency, reaching 826,000 IOPS at the edge of its performance envelope (~7 milliseconds of latency).

- High scalability: As EDA projects progress, the data they generate can grow exponentially. Azure NetApp Files provides large-capacity, high-performance single namespaces with large volumes of up to 2 PiB, scaling seamlessly to support compute clusters of up to 50,000 cores.

- Simplicity: Azure NetApp Files is designed for simplicity, with a convenient user experience through the Azure portal or API automation.

- Cost efficiency: Azure NetApp Files offers cool access, which transparently moves cold data blocks to lower-cost Azure storage and automatically returns them to the hot tier when they are accessed. In addition, Azure NetApp Files reserved capacity provides significant cost savings compared to pay-as-you-go pricing, further reducing the high costs associated with enterprise-grade storage solutions.

- Security and resiliency: Azure NetApp Files provides data management, control plane, and data plane security features, ensuring that critical EDA data is protected and available, with key management and encryption for data at rest and in transit.
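The cool access behavior described under cost efficiency can be pictured as a simple policy: blocks that stay idle for a cooling period move to a cheaper tier, and a read brings them back to the hot tier. The toy model below illustrates that idea only; it is not how Azure NetApp Files is implemented, and the class, its methods, the block names, and the 30-day cooling period are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class ToyTieredVolume:
    """Conceptual model of cool access (hypothetical, not the service's implementation):
    idle blocks move to a cool tier after a cooling period and return on read."""
    cooling_period_days: int
    last_access: dict = field(default_factory=dict)  # block id -> day of last access
    cool: set = field(default_factory=set)           # block ids on the cool tier

    def write(self, block, day):
        """Writing a block marks it as recently used and keeps it hot."""
        self.last_access[block] = day
        self.cool.discard(block)

    def read(self, block, day):
        """Reading a cool block brings it back to the hot tier; returns True if it was cool."""
        was_cool = block in self.cool
        self.cool.discard(block)
        self.last_access[block] = day
        return was_cool

    def tier_down(self, today):
        """Move blocks idle for at least the cooling period to the cool tier."""
        moved = [b for b, t in self.last_access.items()
                 if b not in self.cool and today - t >= self.cooling_period_days]
        self.cool.update(moved)
        return sorted(moved)

    def hot_blocks(self):
        return sorted(set(self.last_access) - self.cool)


if __name__ == "__main__":
    vol = ToyTieredVolume(cooling_period_days=30)
    vol.write("netlist_v1", day=0)
    vol.write("netlist_v2", day=25)
    print(vol.tier_down(today=40))           # ['netlist_v1']
    print(vol.read("netlist_v1", day=41))    # True
    print(vol.hot_blocks())                  # ['netlist_v1', 'netlist_v2']
```

For EDA, this fits the lifecycle of older design revisions and simulation outputs: they cost less while idle but remain in the same namespace, so jobs that revisit them need no path changes.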

The graphic below shows the EDA production cluster that the Azure Cloud Hardware team runs in Azure, where Azure NetApp Files serves clients with more than 50,000 cores per cluster.

Azure NetApp Files provides the scalable performance and reliability we need, facilitated by seamless integration with Azure, for the diverse set of electronic design automation tools used in silicon development.

—Mike Lemus, Director, Silicon Development Compute Solutions at Microsoft.

In today's fast-paced world of semiconductor design, Azure NetApp Files offers agility, performance, security, and stability: the keys to delivering silicon innovation for our Azure cloud.

—Silvian Goldenberg, Partner and General Manager for Design Methodology and Silicon Infrastructure at Microsoft.

Learn more about Azure NetApp Files

Azure NetApp Files has proven to be a storage solution for the most demanding EDA workloads. By providing low latency, high throughput, and scalable performance, Azure NetApp Files supports the dynamic and complex nature of EDA tasks, ensuring rapid access to cutting-edge processors and seamless integration with Azure HPC solutions.

Check out the Azure Well-Architected Framework perspective on Azure NetApp Files, where you will find detailed information and guidance.

For more information about Azure NetApp Files, see the Azure NetApp Files documentation.