In this blog, we delve into how large language models, generative AI, and the Triangle System help us use automation and feedback loops for more efficient incident management.

High service quality is essential for the reliability of the Azure platform and its hundreds of services. Continuously monitoring platform service health allows our teams to promptly detect and mitigate incidents that may affect our customers. In addition to automated triggers in our system that react when thresholds are breached, and to customer-reported incidents, we use Artificial Intelligence-based Operations (AIOps) to detect anomalies. Incident management is a complex process, and at Azure's scale it can be a challenge for the teams involved to resolve an incident efficiently and effectively with the rich domain knowledge it requires. I've asked our Azure Core Insights team to share how they employ the Triangle System using AIOps to drive a quicker time to resolution, ultimately benefiting the user experience.

– Mark Russinovich, Azure CTO at Microsoft

Optimizing incident management

Incidents are managed by designated responsible individuals (DRIs), who are tasked with investigating incoming incidents to determine how each incident must be resolved and by whom. As our product portfolio expands, this process becomes increasingly complex, because an incident logged against a particular service may not have its root cause there and could stem from any number of dependent services. With hundreds of services in Azure, it is nearly impossible for any one person to have domain knowledge in every area. This limits the efficiency of manual diagnosis, resulting in redundant assignments and an extended time to mitigate (TTM).

AI agents are maturing thanks to the improved reasoning ability of large language models (LLMs), which enables them to articulate all the steps involved in their thought processes. Traditionally, LLMs have been used for generative tasks, such as summarization, without drawing on their reasoning ability for real-world decision making. We saw a use case for this ability and built AI agents to make the initial assignment decision for incidents, saving time and reducing redundancy. These agents use LLMs as their brain, allowing them to think, reason, and use tools to perform actions independently. With better reasoning models, AI agents can now plan more effectively and overcome previous limitations in their ability to “think” comprehensively. This approach improves not only efficiency but also the overall user experience by ensuring faster resolution of incidents.

Introducing the Triangle System

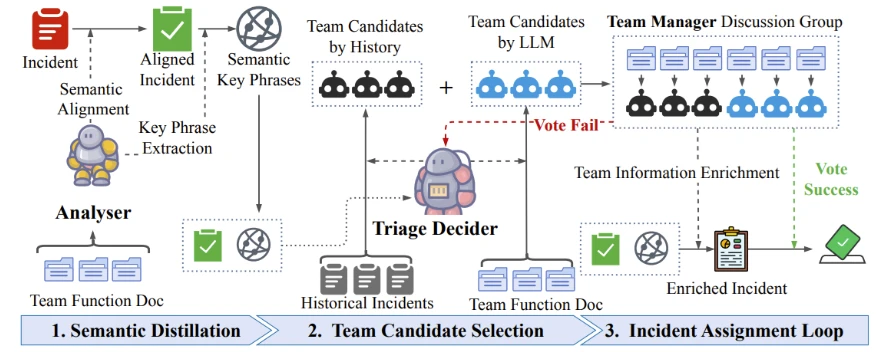

The Triangle System is a framework that employs AI agents to triage incidents. Each AI agent represents the engineers of a particular team and is encoded with that team's domain knowledge for triaging issues. It has two advanced features: Local Triage and Global Triage.

Local Triage System

The Local Triage System is a single-agent framework that uses one agent to represent each team. Each agent makes a binary decision, accepting or rejecting an incoming incident on behalf of its team, based on historical incidents and existing troubleshooting guides (TSGs). TSGs are sets of instructions that engineers have documented for troubleshooting common issue patterns. These TSGs are used to train the agent to accept or reject incidents and to provide the reasoning behind its decision. In addition, the agent can recommend the team to which the incident should be transferred, based on the TSGs.

As shown in Figure 1, the Local Triage System begins when an incident enters a service team's incident queue. Based on training from historical incidents and TSGs, the single agent uses Generative Pre-trained Transformer (GPT) embeddings to capture the semantic meanings of words and sentences. Semantic distillation involves extracting the semantic information from an incident that is closely related to incident triage. The single agent then decides to accept or reject the incident. If the incident is accepted, the agent provides its reasoning and the incident is handed over to an engineer for review. If it is rejected, the agent either sends it back to the previous team, transfers it to the team indicated by the TSG, or leaves it in the queue for an engineer to resolve.

Figure 1: Local Triage System workflow
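The accept-or-reject step in this workflow can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the production system: the `LocalTriageAgent` class, the `threshold` value, and the sample incidents are all hypothetical, and a toy bag-of-words vector with cosine similarity stands in for the GPT embeddings mentioned above.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a stand-in for a real GPT embedding."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class Decision:
    accepted: bool
    score: float
    reasoning: str


class LocalTriageAgent:
    """Hypothetical single-team agent: accepts an incident when it
    resembles one of the team's historical incidents closely enough."""

    def __init__(self, team: str, historical_incidents: list, threshold: float = 0.3):
        self.team = team
        self.history = [(text, embed(text)) for text in historical_incidents]
        self.threshold = threshold  # assumed cut-off, chosen for illustration

    def triage(self, incident: str) -> Decision:
        query = embed(incident)
        best_text, best_score = "", 0.0
        for text, vector in self.history:
            score = cosine(query, vector)
            if score > best_score:
                best_text, best_score = text, score
        accepted = best_score >= self.threshold
        if accepted:
            reasoning = f"Resembles past {self.team} incident: {best_text!r}"
        else:
            reasoning = f"No past {self.team} incident is similar enough"
        return Decision(accepted, best_score, reasoning)


agent = LocalTriageAgent(
    "storage",
    ["disk latency spike on storage node", "blob write failures after storage upgrade"],
)
print(agent.triage("storage node reports disk latency spike"))
print(agent.triage("dns resolution timeout in virtual network"))
```

The binary decision always comes with a reasoning string, mirroring the requirement that the agent justify its choice. A real agent would also consult TSGs and could recommend a transfer target, which this sketch leaves out.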

The Local Triage System has been in production in Azure since mid-2024. As of January 2025, 6 teams are in production, with more than 15 teams in the onboarding process. The initial results are promising: agents achieve 90% accuracy, and one team saw its TTM drop by 38%, significantly reducing the impact on customers.

Global Triage System

The Global Triage System aims to route each incident to the right team. The system coordinates across all the single agents through a multi-agent orchestrator to identify the team to which the incident should be routed. As shown in Figure 2, the multi-agent orchestrator negotiates with each agent to find the right team, further reducing TTM. The approach is similar to patients arriving at an emergency room, where a nurse briefly assesses symptoms and directs each patient to the appropriate specialist. As we continue to develop the Global Triage System, the agents will keep expanding their knowledge and improving their decision making, which not only significantly improves the user experience by mitigating customer issues quickly but also improves developer productivity by reducing manual toil.

Figure 2: Global Triage System workflow
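The routing idea can be sketched as a small orchestrator that polls each team's agent and sends the incident to the most confident one that accepts. Everything here is an assumption made for illustration: the `Orchestrator` and `Routing` names, the `(accepted, confidence)` agent interface, the keyword-matching agents, and the rule of picking the highest-confidence acceptance are hypothetical, and the negotiation in the real system is likely richer than one round of polling.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Sequence, Tuple

# Hypothetical agent interface: incident text in, (accepted, confidence) out.
Agent = Callable[[str], Tuple[bool, float]]


def keyword_agent(keywords: List[str]) -> Agent:
    """Toy team agent that accepts when at least half of its keywords appear."""
    def decide(incident: str) -> Tuple[bool, float]:
        words = set(incident.lower().split())
        confidence = sum(k in words for k in keywords) / len(keywords)
        return confidence >= 0.5, confidence
    return decide


@dataclass
class Routing:
    team: Optional[str]  # None means no agent accepted; leave it for a human
    confidence: float
    asked: List[str]     # teams whose agents were consulted


class Orchestrator:
    """Hypothetical multi-agent orchestrator: asks each team's agent and
    routes to the accepting agent with the highest confidence."""

    def __init__(self, agents: Dict[str, Agent]):
        self.agents = agents

    def route(self, incident: str, already_rejected: Sequence[str] = ()) -> Routing:
        # Skip teams that have already rejected this incident.
        candidates = [t for t in self.agents if t not in already_rejected]
        best_team, best_confidence = None, 0.0
        for team in candidates:
            accepted, confidence = self.agents[team](incident)
            if accepted and confidence > best_confidence:
                best_team, best_confidence = team, confidence
        return Routing(best_team, best_confidence, candidates)


router = Orchestrator({
    "storage": keyword_agent(["disk", "blob", "storage"]),
    "networking": keyword_agent(["dns", "vnet", "latency", "packet"]),
    "compute": keyword_agent(["vm", "host", "reboot"]),
})
print(router.route("vm reboot loop after host update"))
print(router.route("billing page shows wrong currency"))
```

Returning `team=None` when no agent accepts keeps a human in the loop for incidents outside every agent's knowledge, much as the local workflow leaves some incidents in the queue for an engineer.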

Looking forward

We plan to extend coverage by adding more agents from different teams, which will broaden the knowledge base and improve the system. Some of the ways we plan to do this:

- Extend the incident triage system to work for all teams: By extending the system to all teams, we aim to improve the system's overall knowledge, allowing it to handle a wide range of issues. Creating a unified approach to incident management would lead to more efficient and consistent incident handling.

- Optimize the LLMs to quickly identify and recommend solutions by correlating error logs with the specific code segments responsible for the problem: Optimizing the LLMs to quickly identify, correlate, and recommend solutions will substantially speed up the troubleshooting process. It allows the system to provide accurate recommendations, reducing the time engineers spend debugging and leading to faster resolution for customers.

- Expand automatic mitigation of known issues: Implementing an automated system to mitigate known issues will reduce TTM and improve the customer experience. This will also reduce the number of incidents that require manual intervention, allowing engineers to focus on delighting customers.

We first introduced AIOps as part of this blog series in February 2020, where we highlighted how integrating AI into Azure's cloud platform and DevOps enhances service quality, resilience, and efficiency through key solutions including hardware failure prediction, pre-production diagnostics, and incident management. AIOps continues to play a critical role today in predicting, protecting against, and mitigating failures and their impact on the Azure platform, and in improving the customer experience.

By automating these processes, our teams are empowered to quickly identify and resolve issues, ensuring a high-quality service experience for our customers. Organizations that want to strengthen their own service reliability and developer productivity can do so by integrating AI agents into their incident management processes, following the Triangle System. Read the Triangle: Empowering Incident Triage with Multi-LLM-Agents paper from Microsoft Research.

Thank you to the Azure Core Insights and M365 teams for their contributions to this blog: Alison Yao, Data Scientist; Madhura Vaidya, Software Engineer; Chrysmine Wong, Technical Program Manager; Li, Principal Data Scientist Manager; Sarvani Sathish Kumar, Principal Technical Program Manager; Murali Chintalapati, Partner Group Software Engineering Manager; Minghua Ma, Senior Researcher; and Chetan Bansal, Sr Principal Research Manager.